How to get Postgres ready for the next 100 million users

===

Claire: So shout out to Carol Smith and Aaron Wislang, who are backstage and who are producing the show and who are on board to produce more of them. So the plan is these are gonna be monthly episodes. And Carol, I think, am I allowed, tell me in the private chat, am I allowed to reveal the date for the next episode?

So let's see if I can jump in there. So the date is May 3rd. Wednesday, May 3rd. Will be the next episode. We're still working out our guest invitations and the specific topic, so that will remain a mystery. And we'll shortly be adding that event to the Discord and promoting the aka.ms link for the calendar invitation.

In fact, I may have that by the end of this episode. So, let's get started. Pino, you ready?

Pino: Yes. Great. Very exciting. Let's do it.

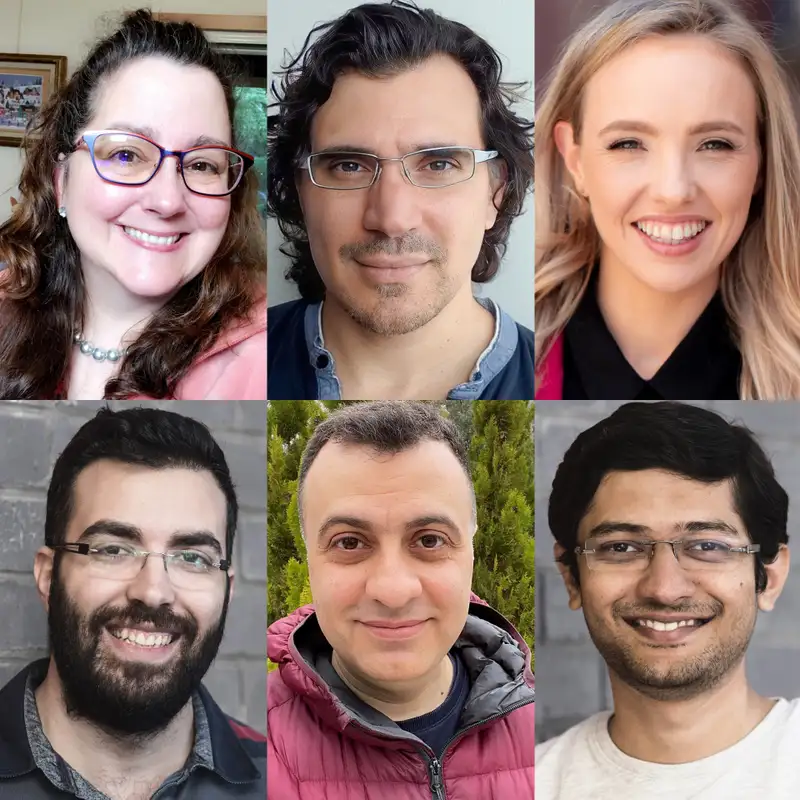

Claire: Okay, so welcome to Path To Citus Con, episode two. The topic for today is: how to get Postgres ready for the next hundred million users. My name is Claire Giordano. I am one of the co-hosts, and I'm here today with Pino de Candia.

Pino: Hi everyone.

Claire: Pino is an awesome database person who is joining us from New Orleans today. And we have four guests on the show who we wanna introduce. But first I want to thank all of you who are joining in the audience. There's obviously, if you're listening to me, then you are listening to general stage.

But I wanna point out that the chat is in the #cituscon channel and specifically, it's in the path-to-cituscon-ep02 thread which has a bit more detail about the topic in that thread name, too. So there's gonna be a live chat happening there while we're having this conversation.

So we hope you can join us and I think I already did the shout out to Carol Smith and Aaron Wislang, who are backstage and are organizing this. If you have any problems with audio or any compliments or feedback, obviously shout out to them. So, and then we are recording this. And there is a code of conduct as well that you can find at aka.ms/cituscon-conduct.

But the recording will go online on YouTube in the next 24 to 48 hours, also, for anybody who joins late and wants to listen to the rest of it. So without further ado, let's welcome our guests. So the first guest I wanna welcome is Melanie Plageman, who's a senior software engineer, a Postgres contributor who is focused on IO performance and IO observability.

And she works on the Postgres Open Source team here at Microsoft.

Melanie: Hi.

Claire: Welcome. And Samay Sharma is here as well. He's an engineering leader on the Postgres Open Source team at Microsoft. And he has, in his past life, worked with a lot of customers to optimize the performance of their Postgres deployments and their Citus deployments too.

I first met Samay when he worked for Citus Data before the Microsoft acquisition.

Samay: Hi everybody.

Pino: Maybe I'll jump in here and introduce Abdullah Ustuner. Abdullah is an engineering manager for the Citus Open Source team, and he's based in Europe. Hi Abdullah.

Abdullah: Hi everyone. Happy to be here.

Pino: And we also are lucky to have Burak Yucesoy as our guest.

Burak is a principal engineer who works on the Azure Cosmos DB for PostgreSQL managed service team at Azure. It's basically Citus on Azure. Hi Burak.

Burak: Yeah, hi everyone.

Claire: And Burak is also a new dad, so I'm hoping he's not too sleep-deprived today.

Burak: No, I'm, I'm fine.

Claire: Good, good, good. It's always good to sleep.

Burak: Yeah.

Claire: So when Pino and I were chit-chatting about how to start this conversation, obviously we're looking to the future and, how to get Postgres ready for the next hundred million users. But we thought we might start by looking backwards in time and chat a little bit about what your various first versions of Postgres were when you first got involved with Postgres.

Start with you Melanie.

Melanie: Well, actually 9.6 was the first version of Postgres that I used, and I got interested in the C language extension framework. And so I don't actually remember what features of Postgres, outside of being able to write extensions, I thought were really cool, but that was the first version that I used.

Claire: Awesome. Samay?

Samay: Yeah, mine was 9.2. So my first Postgres project was writing the first version of mongo_fdw as an intern. So I started working on that. So like Melanie, I also came into the ecosystem via extensions. And after that I thought it was really cool at that time that you could use Postgres to query other databases, and Postgres provided the ability to do that with foreign data wrappers.

So that got me interested. And yeah. And after that I worked on a bunch of other stuff too.

Claire: I'm very glad you became an intern at Citus and that that sealed your future with Postgres.

Samay: Yeah, it did. I didn't know I was making such a big decision at that time. But I'm happy with that decision.

Claire: What's your story, Pino? I know you're not a guest, you're a host, but I'm curious. Yeah. When did you...

Pino: Sure. So, I first got involved with PostgreSQL in mid-2020 when I joined Azure to work on the control plane for Citus on Azure. And my database experience was pretty limited before that.

I did, from 2004 through 2007, work on Amazon's Dynamo. This was not DynamoDB, the AWS service, but the Dynamo that hosted the Amazon shopping cart back then. And we wrote a paper about that. So that was an early NoSQL database. And so joining PostgreSQL was exciting for me, and Citus was exciting because it's a relational database that's distributed.

Claire: Cool. What about you, Burak?

Burak: Well, to be honest, I don't remember the first version of Postgres that I used, but the first version that I worked on as a developer was about 9.4. And similar to Samay, I also started to work with Postgres through an extension, the Citus extension. And then I also kind of fell in love with the Postgres extension ecosystem.

And for a brief period of time, I was also a maintainer of a HyperLogLog extension. So yeah, that's how I started to work with Postgres.

Claire: And for people in the audience not familiar with HyperLogLog, or some of us call it HLL. Quick definition?

Burak: Yeah, so basically it introduced a new data structure which allows you to calculate count-distinct queries very fast and in a memory-efficient manner.

So normally to calculate count-distinct, you need to keep a big list of data in memory, but with HyperLogLog or HLL, if you are okay with some mathematically bounded error rate, it can give you an approximate result very quickly.
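To make Burak's description concrete, here is a minimal sketch of the HyperLogLog idea in Python. It is a toy written for this transcript, not the code of the Postgres HLL extension; the class name, the choice of SHA-1 as the hash, and the register count are all assumptions made for illustration.

```python
import hashlib
import math


class HyperLogLog:
    """Toy HyperLogLog (hypothetical class): approximate distinct counts in fixed memory."""

    def __init__(self, p=12):
        self.p = p                      # number of bits used to pick a register
        self.m = 1 << p                 # number of registers (4096 for p=12)
        self.registers = [0] * self.m   # each register holds one small integer

    def add(self, item):
        # Hash the item to a 64-bit integer (SHA-1 is an arbitrary choice here).
        digest = hashlib.sha1(str(item).encode("utf-8")).digest()
        h = int.from_bytes(digest[:8], "big")
        # The top p bits select a register.
        index = h >> (64 - self.p)
        # The remaining bits give the rank: the position of the first 1-bit,
        # counted from the left and starting at 1.
        rest = h & ((1 << (64 - self.p)) - 1)
        rank = (64 - self.p) - rest.bit_length() + 1
        self.registers[index] = max(self.registers[index], rank)

    def count(self):
        # Standard bias-correction constant for m >= 128.
        alpha = 0.7213 / (1 + 1.079 / self.m)
        raw = alpha * self.m ** 2 / sum(2.0 ** -r for r in self.registers)
        zeros = self.registers.count(0)
        # For small cardinalities, fall back to linear counting.
        if raw <= 2.5 * self.m and zeros:
            return self.m * math.log(self.m / zeros)
        return raw


hll = HyperLogLog()
for i in range(100_000):
    hll.add(f"user-{i}")
    hll.add(f"user-{i}")  # re-adding the same value never changes a register
print(round(hll.count()))
```

The sketch keeps only 4096 small integers no matter how many values are added, which is the memory trade-off Burak describes: the answer is approximate, with a typical relative error of roughly 1.04 divided by the square root of the register count.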

Claire: All right. And then Abdullah, just to set context, when did you first get started with Postgres? What was your first version?

Abdullah: Let's see. For me, I think it was late 2021. At the time we were building a low-code application platform and we decided to go with Postgres at the time, I think it was version 13. So compared to others, my introduction to Postgres has been relatively recent and until a couple months ago, I have been on the other side of the fence, really kind of using Postgres for our product lines.

And in February I joined the Citus team, and now I'm kind of working closer to the technology itself.

Claire: Cool. So it's actually.

Pino: Yeah. Can I interrupt you for a second? I'd love to know, get the same answer from you. I know you've been involved with Postgres for a long time and you're...

Claire: I got involved in the 9.x days as well. I think it was 2017. April, 2017. So and I had no idea how much I would enjoy being part of the Postgres community. I remember going to like my first PGConfEU with trepidation. It was in Lisbon, and I wasn't sure what to expect and I wasn't sure if it would be clubby or cliquey.

Would I be welcome, would it be inclusive? And I made friends and started to get to know people even outside of the Citus team that, you know, I was part of at that point. So yeah, 2017.

Pino: And I do have to say I dropped it in the chat. But for the audio audience, Claire was on PostgreSQL Person of the Week recently, so I dropped a link to that.

But you can also just search Claire Giordano PostgreSQL Person of the Week, which I think is a great honor.

Claire: Well, you know, Andreas Scherbaum started the Postgres Person of the Week thing back in the beginning of 2020. I think it was right before COVID hit. And he does these written interviews and there's a new Postgres Person of the Week every single week.

He's actually got quite a backlog now and he's always looking for new recommendations of new people. He's trying to be as inclusive as possible and so there's a lot of us that have been Postgres Person of the Week and it's really fun because you learn things about people in this global community that you didn't know about.

Melanie, you've done one of those, haven't you?

Melanie: Yeah, in 2020, I think.

Claire: Awesome. And I think Samay, I thought I saw an interview for you as Postgres Person of the Week too. Is that right?

Samay: Yeah, mine went out a month ago in March, I think. I think what's interesting is as part of doing the interview Andreas asks you to recommend four or five more people to interview, which I think is really cool because that kind of increases the graph of people he could interview.

So I found that to be an interesting thing.

Claire: So I think we probably should pivot to the reason for today, which is how to get Postgres to the next hundred million users. But we did have two more things that we wanted to talk about because Postgres 16's code freeze has been just happening over the last couple of weeks, and of course, you know, Postgres 15 feels like it released yesterday, although it wasn't yesterday. It was probably six months ago now. I'm just curious whether there are any recent Postgres features that any of you think are the cat's meow, that you think are amazing, that might be new to people in the audience. Who wants to jump in first?

Burak: I'll jump in. Like, it's not a specific feature. But I really love the improvements regarding logical replication in Postgres because I think all these improvements are setting stage for something quite big in future releases, both in Postgres and also as an extension or as a, "what you can do with Postgres."

Because, when you have a logical replication set up and if it satisfies certain preconditions, then you can do lots of cool stuff like bi-directional replication or zero-downtime failovers or zero-downtime upgrades. So I think it's not fully ready yet, but it will be a huge thing when all these little pieces come together and it would allow us to create and do a lot of awesome things with Postgres.

Melanie: On that note, logical decoding on physical standbys was merged. Now we've had the feature freeze, but not the code freeze. So I mean, still tentative what's actually in 16, but that was one of the features that's been worked on for years and that is a pretty huge step forward.

Claire: That's actually a really important clarification because we're doing this recording now.

It's gonna go up on YouTube, and if someone listens to this in six months or 12 months, the exact final contents of PG 16 may have changed between now and the release, which will come out when, Melanie? This September? This October?

Melanie: Usually in September. Yeah.

Claire: Exactly. So we just want everyone to always go double check what's actually in PG 16 when the final release is cut.

Samay: Yeah, Melanie and Burak both combined stole my answer. So, I have to think more to give a new answer, but I think one thing which I found really cool for 15 was around the removal of the stats collector and storing stats in shared memory. I mean, I'm very passionate about giving the right data to users so they can decide how to tune their Postgres database and things like that.

So, I think just that feature allows you to actually store much more statistics. And I think Melanie greatly leveraged that to actually work on pg_stat_io for Postgres 16, which is also something which went in and I think there's room to capture more statistics in pg_stat_io and also around vacuum statistics or checkpoints, et cetera, in views and tables instead of just in logs like more statistics about these things.

So I feel that's also very cool, kind of a good base for building many more features on top of.

Melanie: Yeah, with the old stats collector, it was pretty hard if you wanted to add reliable new statistics as a feature. There's just a lot of aspects of it from an architectural perspective that would make those statistics difficult to act on.

So I think actually, that's one thing for potential contributors to think about: the statistics subsystem now is much easier to add on top of, and there's lots of room for suggesting and proposing new types of statistics. I think just recently we had some contributors from Dalibo propose a whole new set of different types of parallel query statistics for 17.

So there's lots of room for that.

Pino: Thanks, Melanie. Can I chime in and remind everyone? So this live show is a pre-event for Citus Con 2023, that's happening next week on April 18th and 19th. And both Melanie and Samay are going to give talks there. And I'd love for you all to give a quick blurb about what your talk is about.

Maybe Melanie, you can start.

Melanie: Sure. I'm gonna talk about the new statistics view in 16 called pg_stat_io that I contributed. And it's just gonna be about how it gives you additional IO observability and different scenarios where you can use it to sort of understand the causes and potential solutions of different bottlenecks and performance issues in your instance.

Claire: And your talk is part of the Americas livestream, so it's actually happening... you're delivering it live next Tuesday.

Melanie: Yeah.

Claire: Cool.

Samay: Yeah. My talk, it's a recorded talk, is going to be released next week, but it's titled Optimizing Postgres for Write-Heavy Workloads featuring Checkpoint and WAL Configs.

I think the goal of that talk is, obviously there's a bunch of things you can do in the application side to kind of optimize for your write-heavy workloads. But if you are at the point where you need to squeeze more performance out of Postgres by tuning some of its parameters like max WAL size, checkpointing-related ones like WAL compression and those kinds of things, bg writer.

So it is gonna talk about all of those concepts in terms of how you can squeeze more performance out of Postgres for write-heavy workloads.
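As a rough illustration of why `max_wal_size` matters for write-heavy workloads, the sketch below estimates how often checkpoints would be forced for a given WAL generation rate. This is a back-of-the-envelope model and not material from Samay's talk: the function name and the WAL rates are hypothetical, and real Postgres starts a checkpoint somewhat before `max_wal_size` is reached, so treat the result as an upper bound on the interval.

```python
def estimate_checkpoint_interval_s(wal_rate_mb_per_s, max_wal_size_mb=1024,
                                   checkpoint_timeout_s=300):
    """Rough upper bound on seconds between checkpoints (hypothetical helper).

    Defaults mirror the stock settings: max_wal_size = 1GB and
    checkpoint_timeout = 5min.
    """
    if wal_rate_mb_per_s <= 0:
        # With no WAL traffic, only the timeout triggers checkpoints.
        return float(checkpoint_timeout_s)
    seconds_to_fill_wal = max_wal_size_mb / wal_rate_mb_per_s
    # Whichever limit is hit first starts the next checkpoint.
    return min(float(checkpoint_timeout_s), seconds_to_fill_wal)


# Illustrative numbers only: a workload writing 20 MB of WAL per second.
print(estimate_checkpoint_interval_s(20))                          # default 1 GB
print(estimate_checkpoint_interval_s(20, max_wal_size_mb=16384))   # 16 GB
```

With the defaults, the hypothetical 20 MB/s workload would checkpoint well before the five-minute timeout; raising `max_wal_size` lets the timeout become the limiting factor instead.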

Pino: Thanks Samay. And I guess the recorded talks are going to be available at the start of the event. Is that right, Claire?

Claire: Yeah, they all get pushed to public on YouTube probably like five minutes before we start.

So everything's gonna be available to watch starting on Tuesday, except obviously for the EMEA livestream, which is on Wednesday morning, European time. Yeah, I'm so excited. All right. So I think it's time to dive into the future and how to get Postgres ready for the next hundred million users.

I think I wanna start with maybe the user-focused perspective. So Samay, you've worked with a ton of Postgres users. Are there any common challenges that people run into that you think future versions of Postgres could avoid?

Samay: So I think I'll answer this question in two parts.

One is kind of challenges they run into and how we can resolve them. And also the user profile for Postgres and for all databases in general is going to evolve over time. So I'll kind of answer that in two parts. I think from a current, I mean one meta topic instead of going into specific things is "how do you configure Postgres well?" I think that's a common challenge for users. We have a lot of configurations, which is a good thing, but it also makes it like there's not a very clear roadmap or a manual on how exactly all of them interact with each other. What data should you monitor to decide which configs to change?

So it's very common to see, you know, tuning mistakes from users. One example of this: David actually pushed a patch renaming one of the config parameters because people were using it for a completely different reason than what it was designed for.

Another example is autovacuum_max_workers. If your autovacuum is not catching up, very commonly you'll just up the max workers thinking more parallelism is gonna help, and it doesn't unless you also adjust the cost limiting parameters and other things. work_mem also. I mean, ideally everybody should read the documentation and figure out how exactly to do things, but people run out of memory and then they decide, "oh, I'm running out of memory, let me increase my work_mem," which actually increases your probability of running out of memory because that's a per-operation thing. So if you have a lot of operations and you're saying each operation can use more memory, that leads to more problems. So I feel in general, from a tuning standpoint, we have many configs, and the data we expose from a stats standpoint doesn't really tie you to, okay, this is what you need to change if you see this in your data.
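The autovacuum point can be sketched with a little arithmetic. The model below is a simplification, assuming the documented behavior that the autovacuum cost limit is shared among the running workers, and ignoring the time workers spend doing actual I/O; the function name is hypothetical, while the defaults mirror the stock settings (`vacuum_cost_limit` of 200, `autovacuum_vacuum_cost_delay` of 2ms, three workers).

```python
def autovacuum_cost_rate(cost_limit=200, cost_delay_ms=2.0, active_workers=3):
    """Return (per_worker_rate, total_rate) in cost units per second.

    Hypothetical helper: a simplified model in which the cost limit is
    one shared budget that is divided among the active workers.
    """
    cycles_per_second = 1000.0 / cost_delay_ms
    total_rate = cost_limit * cycles_per_second    # the shared budget
    per_worker_rate = total_rate / active_workers  # each worker's slice
    return per_worker_rate, total_rate


for workers in (3, 10):
    per_worker, total = autovacuum_cost_rate(active_workers=workers)
    print(workers, "workers:", round(per_worker), "per worker,", round(total), "total")
```

In this model, going from 3 to 10 workers leaves the total budget unchanged and only shrinks each worker's share, which is why raising the worker count without touching the cost limit or delay does not help autovacuum catch up.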

And that's also in the documentation for the stats we use. We have quite a few statistics documented, but the implication of each one for a DBA or a developer, I think we need to do a better job of that. One more thing, I don't know if this is a sub-part of this, but I think memory-related statistics are lacking, I feel.

So we do have now, with pg_stat_io and other work, more IO-related statistics, but it's hard to estimate how much memory a query will be using. And by extension, it's hard to actually make sure that it uses only that much by setting limits and whatnot. work_mem is kind of per operation, so it's not necessarily giving you a good bound on how much a particular query can use.
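A small worked example shows why a per-operation limit gives such a loose bound. The helper below is hypothetical and deliberately simplified: it assumes every connection runs one query at a time and that each query has a fixed number of memory-hungry operations (sorts, hashes), and it ignores parallel workers and `hash_mem_multiplier`. The connection and operation counts are invented for illustration.

```python
def worst_case_work_mem_mb(work_mem_mb, connections, memory_ops_per_query):
    """Worst-case memory if every operation uses its full work_mem allowance.

    Hypothetical helper for illustration; real usage is usually far lower,
    but nothing in this simplified model prevents the worst case.
    """
    return work_mem_mb * connections * memory_ops_per_query


# Illustrative numbers: 100 connections, 3 sort/hash operations per query.
print(worst_case_work_mem_mb(4, 100, 3))    # with the 4 MB default
print(worst_case_work_mem_mb(64, 100, 3))   # after "fixing" OOM by raising it
```

Raising `work_mem` from 4 MB to 64 MB multiplies the worst case by 16 in this model, which is the trap Samay describes: the setting bounds each operation, not the query or the server.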

So I feel these are kind of just problems which users keep facing around tuning and optimizing Postgres, which is why I give talks around this topic because I feel you can squeeze a lot more from Postgres. I'll make another small point around the changing user base, right?

So, I've been working with customers for seven to eight years. And previously users used to have DBAs, folks whose full-time job was to maintain and manage databases. That's also a very on-prem-centric view, and over time things have moved to the cloud, where more and more users are now running Postgres.

So it's easier to run instances or to get instances so people don't necessarily invest as much time in understanding all of these concepts, so I think that's something we as a community and as a product, and not just the product as in Postgres core, but also the managed services around it need to evolve to make it easier for such users to make the right decisions around their Postgres setup.

So yeah, those, I would say, are the main things which I feel are challenges.

Claire: Okay. That's a lot to unpack.

Melanie: I also think that defining what you mean by "the next hundred million users" is important because, if we're talking about a hundred million users that are gonna migrate from other existing databases and are even somewhat sophisticated, that's one thing, but if we're just talking about numbers, that's different.

Like, are we talking about people that are in college and they, for their project, are they gonna choose MySQL or Postgres? Like, that's a totally different set of problems. Like, my sister-in-law's in college and used MySQL instead of Postgres because she couldn't figure out how to deal with the pg_hba.conf.

Right, and MySQL made it easier. So I think it's really important, what kind of question we're asking. We work for cloud providers, most of the people on this panel, so we have a totally biased version of this. But, the question is, which a hundred million users are you talking about? Right.

Claire: Well, that's a really good question, and I suppose it's up to us. I mean, the fact of the matter is, if we fast forward 10 years, all those people in college now are not gonna be in college anymore. And if they're building applications, they're going to be choosing a database to run them on. And so I think we have to care about usability and getting started and those kinds of roadblocks that they're gonna run into, as well as the fact that people who are experienced change companies, move jobs, start companies, and they're making decisions as to which database to go to as well.

And are they gonna choose Postgres or not? And if so, why?

Samay: You bring up a very good point, Melanie. I think for users who are not using cloud providers, their challenges are still going to be more around failover, auto failover, failure detection, all of that. Like, how do we set up HA properly, how do we set up, you know, backups and restores properly?

You know, we do have some components in Postgres, but I personally would like to see more of those problems solved in Postgres. There are tools around it, but there are quite a few, and people often don't know which one to pick, and if they pick one, they don't know how to use it and whatnot.

So, I think if you can solve some of those problems in core, I think in the longer term that's probably better.

Melanie: And on the open source team, we mostly hear from people that are sophisticated enough to email a mailing list, right? So there's a bunch of different biases in terms of what users we're actually serving.

Burak: Yeah, I think one similar aspect or one other way to think about this is that I think the future users will be more demanding because, like, 10 years ago the community was not that big. Like, you could attend a conference and you would meet almost all the key people in the community.

And when the community is smaller, I think people are usually more forgiving, or they are more willing to look past the problems they hit. And I think as the community gets bigger, people will be more demanding. They will, for example, similar to what Samay said, want Postgres to be easier to use, or they'll want HA to be set up much more easily.

And I think they will demand this in a good way because today we are boasting about how Postgres Cloud is very reliable and the greatest database of all, and I think that increases the expectation, and I think it is up to us as a whole Postgres community to satisfy or live up to that expectation.

Abdullah: Just to add to what Burak just mentioned, in addition to expectations, I think one pattern I have noticed, and I spend a lot of my time on the other side of the fence you know, building applications, services that actually use different database platforms. One challenge I see is: teams and engineers who get started on a project don't necessarily know all the different requirements, all the different needs they will eventually have when they get started.

You know, it may be a prototype stage, it may be an incubation stage. And what happens usually is people lean on their muscle memory, based on whatever experience they or somebody on their team might have with, you know, a different DB. And with limited knowledge about what they may need down the line, it's really hard to choose Postgres or some other platform, and to even know how to get started. So, and documentation may not be the right term here, but for somebody who's starting on a project, how can we guide them?

How can we make it easier for them to choose and to get started and to better understand as their needs evolve to a more advanced stage how they can start configuring and tuning their systems accordingly. I think that's going to be one of the differentiating points, I hope for Postgres.

Samay: And I think one of the things is, I see, you know, blogs all the time saying "Postgres for X," "Postgres for Y," and whatnot. And I think that ties to Burak's point of users getting more demanding because now Postgres has a very diverse user base.

Like, people are using it for queues, for Mongo replacement, for running NoSQL-like workloads, for running traditional OLTP workloads, for rollup workloads. So I think the demands are very diverse as well, in addition to there just being more demands. But they're also coming from a very diverse audience.

Claire: So, I'm trying to figure out how much of our time today we should spend thinking about those users who are just getting started, or today's college students who are tomorrow's co-founders of startups who are tomorrow's leaders even, in large organizations. So you mentioned your sister-in-law, Melanie.

Melanie: Mm-hmm.

Claire: Do you have strong opinions about things we should do in the Postgres world to make it easier for people to choose Postgres to begin with?

Melanie: I think there's a lot of things that we could do. I think some of them... just from her experience, like using MySQL, the initial experience was better.

And I think there are things that we can do, but it's also a question of, well, how do we spend our time as developers, right? Like, the number of people working on the ecosystem and core together, it's a pretty small number of people. Yes, we have a lot of contributors, but there's actually so much work to do, right?

So the total number of people, if you compare it to, like, SQL Server or something like that, we don't have a lot. So we really have to prioritize. And I think one thing that Samay says pretty often is that we could be more data-driven here, like: what are the different interest groups or segments that are relevant here?

Like, and what are their needs? And so how should we spend the time given that we definitely don't have infinite time? Right. So a small technical thing that I thought of earlier was, okay, well, as people's requirements change for their running Postgres instance, they wanna change their configuration, they wanna change various things.

And even that sometimes is not that easy. There are certain GUCs that you can't change without restarting your database, right? And that's for technical reasons, and for some of those things, it's something we could actually change. But it's like, is that the most important thing? Or is it more important that we adjust some of the systems around, like, autovacuuming and buffer pool management to make them more feedback-driven or based on the workload and that kind of thing?
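For readers who want to see which settings fall into the "needs a restart" bucket Melanie mentions, Postgres exposes a `context` column in the `pg_settings` view. The sketch below maps those context values to what a change requires; the dictionary wording, the helper name, and the three sample settings are illustrative choices, and on a real server you would read the context from `pg_settings` rather than hard-code it.

```python
# What it takes to change a setting, keyed by pg_settings.context.
# The descriptions are paraphrased for this sketch.
ACTION_BY_CONTEXT = {
    "internal": "cannot be changed directly",
    "postmaster": "requires a server restart",
    "sighup": "edit the config and reload, no restart needed",
    "superuser-backend": "takes effect for new sessions only",
    "backend": "takes effect for new sessions only",
    "superuser": "SET in a session, with sufficient privileges",
    "user": "SET in a session",
}


def action_for(context):
    """Hypothetical helper: describe how a setting with this context is changed."""
    return ACTION_BY_CONTEXT.get(context, "unknown context")


# A few well-known settings and their contexts, hard-coded for illustration.
SAMPLE_SETTINGS = {
    "shared_buffers": "postmaster",
    "max_wal_size": "sighup",
    "work_mem": "user",
}

for name, context in SAMPLE_SETTINGS.items():
    print(f"{name}: {action_for(context)}")
```

Settings with the `postmaster` context are the ones that force the restart Melanie is talking about.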

So, there's like so many things we could do and essentially how we choose what we work on as people contributing is pretty haphazard. Right.

Pino: So, I'm curious: is the origin of where we are now that the Postgres community is focused on production workloads, on being very extensible, and on experts?

And so, do you see signs that we'll start changing direction and accommodating new user types?

Melanie: Well, what would be the forcing factor for that? Right? Like, we're getting paid by companies, either cloud platforms or you have to think about incentive structures, right?

Pino: So maybe the answer is that some of the communities that surround Postgres can help do some of that work, like Kubernetes integrations like Patroni or cloud services can help close the gap in difficulty. And maybe sorry, go ahead.

Melanie: I was just gonna say, even talking to authors of extensions that are in the extension ecosystem, so not so much Citus, but more, I'm giving Dalibo as an example because I've talked to them recently about this, but they maintain a dozen extensions that are used by their customers for different random things.

Looking at queries visually or query plans visually or doing things with authentication or whatever. And they have lots of things that they would like to see changed, to make their work easier. But the extension ecosystem is less centralized than the hackers mailing list or whatever.

So, I think that the other parts of the Postgres ecosystem outside of just people working on core, people requesting features that would go on core. I think they were describing that they feel like that ecosystem could be organized maybe in a different way.

Samay: And I think one thing to add to what Melanie said, Pino, is also one of the ways to solve this problem is to find ways to make it easier for newer people to contribute to Postgres.

Whether in core, whether via extensions, or by building tooling around it, as you suggested. So I think that's also one of the problems we will need to solve as a community is: how do we get more diverse people with diverse backgrounds, application developers, to bring that perspective in because maybe one of the reasons why we are where we are is because we have a very similar perspective as a community.

Claire: Okay, so let's jump into that for a second. Next on my list was to talk about what's missing from Postgres to work well in cloud services. But I'll put a pin in that question. I'll try to hold my curiosity for a little bit. And let's talk about how the Postgres community can evolve, should evolve to keep up with this growth in usage.

Like, how are we gonna increase the total number of contributions and contributors into Postgres? And I guess I wanna start with you, Melanie, because I know you have opinions on this.

Melanie: So about how we can make it easier for new contributors?

Claire: Easier, or just how are we gonna scale, right? Like, I hear stories that people will say, "oh, my patch took six months to get reviewed," and that's considered good.

Melanie: Yeah.

Claire: You know, that's fast, that's fast turnaround.

Melanie: So, I know what that's like as someone without a commit bit. So, it's like we said we were gonna talk about this and I thought about it and the problem is that I have no idea how we're gonna fix it. Like, it's really bad and every single commitfest, we talk about it and we talk about it at our team meetings and it just feels like this impossible problem.

And I think that there are, so first of all, there's technical things that we can make better and that we have been doing. So, Andres had, and several people in the community and...

Claire: Andres, Andres Freund.

Melanie: Freund, yeah. And, Andres Freund and some other people in the community like worked on having a CI system.

So you can enable this on the Cirrus CI website through GitHub, and then, when you have your fork of Postgres that you're developing on and you push changes to a branch, you can have it automatically run tests on a couple different platforms. And that's super helpful because a lot of times all you had was, "it works on my machine," right?

And so, I'm not gonna go, I mean, maybe now, but, especially if you're just contributing a patch as a user, you're not gonna go and, like, try to test on Windows or whatever. So, from just a technical accessibility perspective, I think we've been trying to make changes that make it easier for users to contribute, but I think that there is just a lack of review bandwidth among existing committers and contributors, and it feels like a problem that we've been trying to solve for years and have not been able to figure out how to solve. And I don't know that there are good answers to it.

I, most of my development experience came from hands-on pair programming for eight hours a day for months with people who are experienced, like database-internals developers. So every time I see someone who's a new contributor writing a patch, I'm like, so impressed that people get off the starting line because it's pretty hard.

I mean, it's not necessarily Postgres' fault, contributing in general to a database is hard. And then, you add into it the development process being different in the Postgres community than it is for a lot of open source projects, since we don't use GitHub and things like that, that people always cite.

But, I think that like one of the things that can be really hard is not necessarily getting the attention that you want for your patch, but I think that there are small things that we can do, but they're not scalable. So, for example, I hold workshops where we talk about how to draw attention to your thread, but if you propose a patch, and you get feedback a lot of times, just the whole thing of "oh, I need to come up with a minimal repro for this" so that people that are reading this email actually can try my feature and have it be like that code actually get executed. Right.

Oh. Someone asked this question, like, how do I even think about how to answer it so that the discussion moves forward? And so, I think that is the kind of thing that in my experience, the best way to do that is one-on-one pair programming. And that's obviously not scalable, right?

So, I know there are other projects that take contributions from new contributors and are able to get them over the finish line. And we do that. Every release we do that. There are contributions by people who are not paid full-time to contribute to Postgres. But, if we want to scale that, I have no idea how we're gonna do it and we spend a lot of time thinking about it.

Samay: Yeah. And I think it's a hard problem because, so as we have been discussing, there is a whole backlog of things to do. And the people who can help solve that scalability and bandwidth problem, by giving mentorship or by helping create processes or things to make it easier for new contributors, are the same people who are responsible for moving Postgres forward and committing patches.

So, I think balancing their time in a way where we are dedicating time for mentorship and for reviewing others' patches, committing others' patches, and also doing the tough projects, the hard projects of pushing things like direct IO, asynchronous IO, all of those come from the same pool of people.

And that makes it really hard to find ways to make progress. I mean, this is a topic I think about a lot because we have a very diverse team from a seniority level at Microsoft. We have committers. We have senior contributors like Melanie, who don't have the commit bit as she mentioned.

And we also have junior people...

Claire: Who don't, who don't have the commit bit yet. Can I say yet? Yet. Do that whole growth mindset thing here? Yes.

Samay: Yes, absolutely. And then, new people who've joined the team in the last year or so, and their challenges, the challenges of all three stakeholders if you can divide it into three, are so different that it's very hard to come up with a solution which works for all of them.

Right. So I think it's more of a people- and a process-problem. I think the technical challenges, as Melanie mentioned, we are making progress on them. The larger process problem, I think it's the harder problem to solve, but we need to solve it as a community. Otherwise, our backlog will just keep increasing and increasing.

Burak: Yeah. I'll jump in here. Because I think we can also discuss a bit on: you can contribute to Postgres with things other than code, and I think we also have a subject matter expert on this topic in this panel. Claire, so you're not one of the speakers, but I would also like to get your opinion because I know you gave a talk about contributing to Postgres in ways other than code.

So I think those kind of things can also increase the adoption and overview shared.

Claire: Yeah, we're talking right now about how to scale the code contributions, how to scale the number of patches, the number of fixes, the number of developers. But you're absolutely right to point out that, Josh Berkus drew this wonderful pie chart once where he talked about contributions to Postgres and the code contributions to the core in particular was just a small piece of that pie.

And then there's the code development work on extensions, which are outside of the core, but are absolutely a rich part of the ecosystem of what users need to run on Postgres in production. That's just another small piece of the pie. And then there's all sorts of contributions that are really important, right?

Translations, translations of error messages, just making sure users know where to file bugs so that they get attention so that they get fixed. There's all the blogs and all the training and all the videos that get created and then have to get shared, and promoted, because if you write it, people will not read it.

Like if you build it, they will not come. You need to find a way to get your learnings and your lessons out there. So yeah, there's a whole world of non-code contributions that I think are really important to the growth of any technology community and to Postgres. But I don't know that we wanna dive deeper than that here today.

Did I answer your question, Burak, or was there more that you wanted me to touch on?

Burak: Yeah, that's awesome.

Claire: Cool. So Melanie, it sounds like people in the community are talking about this at every commitfest, and it's a challenge, and there are things that are happening that people are trying to get new developers off that starting line and to contribute in each new release, but you don't have all the answers yet, is what you're saying.

Melanie: Yeah, I think the dream scenario for everyone is that people that are not paid to contribute to Postgres full-time can write patches that get committed and reviewed and considered for committing if the feature makes sense, right? Like, we don't wanna design an ecosystem where you have to work for a company and are paid to work on Postgres full-time to contribute.

And I mean, that's my opinion. But I think a lot of other contributors would agree with that. We need people who are doing it full-time because there's obviously an amount of just expertise in day-to-day reps that you need in order to be an open source project maintainer.

We need maintainers, basically. But I don't know what we do to get there, but I mean, part of it is a cultural commitment to trying to take on new, non-full-time Postgres developer contributors' projects as your main feature for that release. You're gonna like try to shepherd that through and that person maybe will be the author, but that is your first priority.

Like, I think stuff like that is easier said than done. But I think that's hard, right? Like, if you're employed to work on Postgres full-time, then maybe having a feature that you didn't author be the thing that you work on full-time isn't as easy of a proposition, right? So.

Claire: Okay. What I'm hearing is, this is a whole other episode, right? There could be a whole conversation with a bunch of people here who are committers and contributors and talk about different ways to evolve.

Samay: I know that I spend about three hours to four hours every week having this conversation with all the stakeholders.

So, I think even an episode is going to be hard to do justice to this topic because this is a genuinely hard problem.

Pino: Yeah, that does sound like a good idea though, to revisit it. But I think if it's okay, Claire, maybe we move to that question you mentioned earlier. I was really curious to hear: what is missing from Postgres to work well in cloud services?

Burak, do you wanna start us off with that?

Burak: Sure, sure. I mean well, there's lots of things we can do or improve in Postgres, but I'll specifically mention two things. And actually, both of these things are, we name-dropped about them in our previous questions. One of them is extensions and, you know, Postgres is designed to be extensible from the very first day and today, Postgres has hundreds or even thousands of extensions because of the initial design principle.

But, unfortunately, many of them are not well-maintained. So they either lack support for the latest Postgres versions, or they have some security vulnerabilities. And because of that, as a cloud provider, sometimes we are not willing to support new extensions. Like, one example is CosmosDB for Postgres. For a very long time, we chose to not support one of the very key extensions because we knew that there was a security vulnerability in that extension, and now that is fixed and we are incorporating that into our service. But I think what I realized so far is that if the extension is not backed by a company who has some committers, it is very likely that those extensions will be outdated because Postgres develops very fast.

And with new versions, with each version, you need to do updates or maintain your extension. This doesn't happen so easily, and because of that, extensions may stay outdated or not support the latest Postgres versions, or they might have unfixed security vulnerabilities, which reduce the adoption.

And also, even for an extension that is well-maintained today, there's no guarantee that it will stay well-maintained in the future. And that also makes cloud providers more conservative.

Claire: Okay, so before you go onto whatever's next in your list, I just wanna shine a light on what you just said about well-maintained extensions.

There are definitely extensions out there that are well-maintained that don't have a commercial entity or interest behind them. Like, I don't know. The one that comes to mind for me is tdigest by Tomas Vondra, who I think is one of the lead maintainers, and it is super useful for calculating percentiles and I imagine a lot of the cloud vendors support that extension.

So, that's the challenge: as a cloud vendor, you have to decide, and there's a lot of choices about what you are going to support in your offering. And you have to be knowledgeable about what is well-maintained and what is not.

Burak: Yeah, definitely. I definitely agree that there are extensions out there that are well-maintained and not backed by a commercial entity.

Like tdigest is one example. PostGIS is like one of the quite big and important examples. And as a cloud provider you definitely need to be part of the community to know that those extensions are actually well-maintained. So, basically there is a huge extension-vetting process that we use to decide on whether we should support this extension or not in our service.

So, yeah, that's the one.

Pino: Burak, do you have any ideas about what the Postgres community could do for extensions? Standards, frameworks? I know security is a problem generally, just having a standard for security across extensions. Someone on the chat, by the way, I wanna mention this, brought up timescale/pgspot, which has support for detecting vulnerabilities in extension scripts, which is great.

Burak: Yeah.

Pino: But yeah, go ahead.

Burak: Yeah, I think that I was going to mention the same thing. I think this one, timescale/pgspot, is a really good project to at least detect those vulnerabilities.

But in the end, I think this is not specific to Postgres; it applies to basically every open source community, because there are lots of people who volunteer their time and effort to make things better, or to publish new things, or to do the support.

But eventually, I think the open source community also doesn't solve this problem, right? Cause if they're not backed by a commercial entity, like if you are a single developer doing some development on your laptop, on your own, at some point there is no reason to keep up other than you wanting to do good for the community.

I think GitHub also started an incentive program to support those open source projects. Maybe more extensions can be part of that somehow, so that the authors and maintainers would have more reason to, you know, support and maintain the extensions they created. And also...

Claire: I think you're talking about the GitHub Sponsors program, I believe.

Burak: Yes. Thanks. Thanks for reminding me the name. I couldn't remember the name. That's one thing. And I think all these maintainers and authors, they really deserve to get well compensated because of the contributions they made. Because you made an extension and it might be used by lots of different companies in lots of different projects.

So I think there's this aspect of having those extensions, keeping those extensions well-maintained.

Claire: So for somebody who's listening to this episode for the first time and isn't that familiar with Postgres extensions, I just wanna point out that you're focused right now, I believe, on this question of which extensions cloud vendors should support, and all of the choices you have to make, and paying attention to which ones are well-maintained and which ones aren't.

And, there are absolutely some challenges, but I also wanna just give a big plus one to the original design of Postgres back in, what was it, 1985 or something like that, and that first design doc, which had as the second or third tenet that there will be extensibility, because that's what's driven a lot of innovation.

Like Citus wouldn't exist as it is, right? This extension that enables you to use Postgres in a distributed fashion. PostGIS is probably the number one most popular tool out there for geospatial data types, and all the people with these different GIS applications, some of these extensions are hugely powerful and they've really added a lot to Postgres and they would not have become part of the core.

Right? Especially with the scaling issues around those development cycles. So, I just wanna say plus one for extensions. They're awesome. Even with these challenges. Do you agree or disagree with me, Burak?

Burak: Definitely. Like, I'm a huge fan of extensions. Obviously, I'm biased as someone who worked on Citus and the HyperLogLog extension, but yeah, a huge plus one for extensions. I really love them.

Claire: Which is not to say that everything's perfect and you know, clearly there are some challenges here.

Burak: Yeah. Yeah. Yeah.

Pino: Were there other areas that you meant to call out in terms of challenges with integrating Postgres and cloud services?

Burak: Yeah. There's one more, but this is not in terms of challenges, but I think it's something that we as a cloud provider should do.

And this was also mentioned a bit in the previous questions: Postgres has lots of knobs and settings and configurations. And as a new user, people are usually clueless about how to get the best out of their database. And there's also lots of diagnostic information in Postgres tables and the statistics views, for example, cache hit ratio or long-running queries or IO statistics, and lots of other things.

And I think this falls more on the cloud provider's side than on the Postgres core community, because I think the Postgres core community provides lots of useful information, but as a cloud provider, we are usually a bit behind on using it or exposing it to customers. So one example, I know Melanie did great work on IO statistics and, overall, the statistics framework.

But, at least so far we didn't utilize that in our cloud service and we definitely should. So I think this is more on the cloud service providers' part to make that information more visible to users. Like, easier to discover and easier to make decisions based upon those statistics.

And using that information to allow making changes and also even guide users to make some changes so that they can get the best performance out of their database.
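As an illustration of the cache hit ratio Burak mentions, here is a minimal sketch in Python of how a service might turn raw Postgres counters into that ratio. It assumes the two counters come from the `blks_hit` and `blks_read` columns of the `pg_stat_database` view; the function name and the sample numbers are hypothetical, and this is not how any particular cloud service does it.

```python
from typing import Optional


def cache_hit_ratio(blks_hit: int, blks_read: int) -> Optional[float]:
    """Return the fraction of block requests served from shared buffers.

    The inputs are assumed to be read from pg_stat_database, for example:
        SELECT blks_hit, blks_read
        FROM pg_stat_database
        WHERE datname = current_database();
    Returns None when there has been no block activity yet.
    """
    total = blks_hit + blks_read
    if total == 0:
        return None  # no data yet, so a ratio would be meaningless
    return blks_hit / total


if __name__ == "__main__":
    # Hypothetical counter values, for illustration only.
    ratio = cache_hit_ratio(blks_hit=990, blks_read=10)
    print(f"cache hit ratio: {ratio:.2%}")
```

Note that `blks_read` counts blocks Postgres had to request from the operating system, which may still have been served from the OS page cache, so the ratio describes shared buffers rather than physical disk reads.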

Melanie: Sorry.

Claire: I was just gonna say that Samay even mentioned earlier, the need to expose even more statistics, make more statistics available to users and to cloud providers.

And I'm just curious, and we can't go into this conversation today, but how many people out there are already starting to use ChatGPT, right? To try to interpret the statistics and figure out what to do? Because once you have those stats, you still have to make decisions as to how your configuration needs to change.

Samay: Yeah, I think there's like kind of three stages to this, right? The first one is just exposing the stats. The second is using those stats and making recommendations. And the third one is just doing those changes on behalf of a customer or a user, right? And I think there are improvements to be made in all three departments.

I mean, just to answer your question for a specific case, I did try ChatGPT for some EXPLAIN plans, and for basic EXPLAIN plans, it actually gives good suggestions around creating missing indexes on columns and stuff. So it was interesting. I was fascinated by that.

Melanie: There's also a lot of projects that, not related to AI, but that are trying to use machine learning to suggest and potentially alter configurations, like OtterTune and I know, Microsoft has an internal project, MSR does, to use machine learning to tune and to base and use as input, the whole fleet.

I think they're focusing on SQL Server and then some on Postgres now. But, to be able to actually come up with suggestions for tuning. And then there's of course, all those extensions that do index-advising and things like that that are not using machine learning. But, there's definitely a push for a level of auto-tuning and making suggestions, and I get why that's happening in the larger ecosystem. But I think, as developers contributing to Postgres, there's a lot we could do just using more traditional database-like concepts that were invented 20 or 30 years ago to do more adaptive optimization and tuning.

So...

Claire: Awesome. Well, I wanna say thank you, Pino and I together want to say thank you to all four of you, Melanie, Samay, Abdullah, and Burak for joining us today. I think that this was a pretty aggressive topic that we could probably talk about for hours. I know there are these other podcasters out there who do these seven-hour conversations and it, this feels like it could have been a seven-hour conversation.

But, everybody does have a day job to get back to. So, we should probably wrap.

Pino: Claire, what's this I hear about stickers being available?

Claire: Oh yeah, very important. So, anybody on the chat that's happening right now, if you would like some cool Citus elicorn stickers, the elicorn is the open source mascot for Citus.

It is part elephant, you know, in homage to the Postgres mascot, and part unicorn because Citus makes Postgres magic by scaling it out horizontally. They come in lots of colors and patterns and just tag Carol S and let her know if you would like some, and our team will work with you to get it sent off in the mail.

I think we have them for five people today, so anybody who wants stickers, go for it.

Pino: Oh, does that mean that host and guests should hang back?

Claire: So the other thing is just a reminder, May 3rd will be the next episode of Path to Citus Con on our Discord channel. You'll see calendar links soon if you wanna add it to your calendar. And next week is Citus Con: An event for Postgres with 37 talks. There are two livestreams, the Americas livestream on Tuesday Pacific time, the EMEA livestream on Wednesday, European time, and then 25 talks that drop at the start of the event. And the talks are awesome. So please, I hope y'all join us, and the virtual hallway track will be here on Discord.

Thank you. Thank you, Pino.

Pino: Thank you Claire. And thanks to all our guests.

Samay: Thanks for having us.

Bye-Bye.

Abdullah: Thank you.

Burak: Thanks all.

Creators and Guests